The State of Search & AI: 2025 Usage and 2026 Predictions

What actually matters when machines do the searching

AI didn’t replace discovery systems. It changed how people interact with them. Most of the industry spent 2025 missing this distinction entirely.

Teams experimented with prompts, dashboards, and new acronyms. AI felt like a new surface that had to be “optimised for” quickly, before someone else figured it out first. Most of this activity was driven by uncertainty rather than evidence. It still is.

(The conference circuit did very well out of it, though.)

That phase will fade. AI stops being novel and starts being ambient. The tools don’t disappear—the anxiety around them does. And once that happens, a quieter truth becomes obvious: the fundamentals that determine whether information is found, trusted, and reused haven’t actually changed.

From experimentation to default behaviour

Right now, AI sits beside existing workflows. People ask it questions they used to type into Google. They test how often their brand appears in answers. They screenshot wins.

But default behaviour looks different from experimentation. When AI becomes infrastructure, users stop exploring and start delegating. Queries get shorter, more decisive, and less forgiving. The intent shifts from “show me options” to “just tell me.”

That change doesn’t reward clever optimisation tricks. It rewards clarity.

When machines have to choose what to retrieve, summarise, or cite, ambiguity becomes a liability. Content that tries to do too many things at once quietly disappears. Content that explains one thing well, from a clear point of view, gets reused—not because it’s clever, but because it’s dependable.

AI-generated answers don’t create a new search system

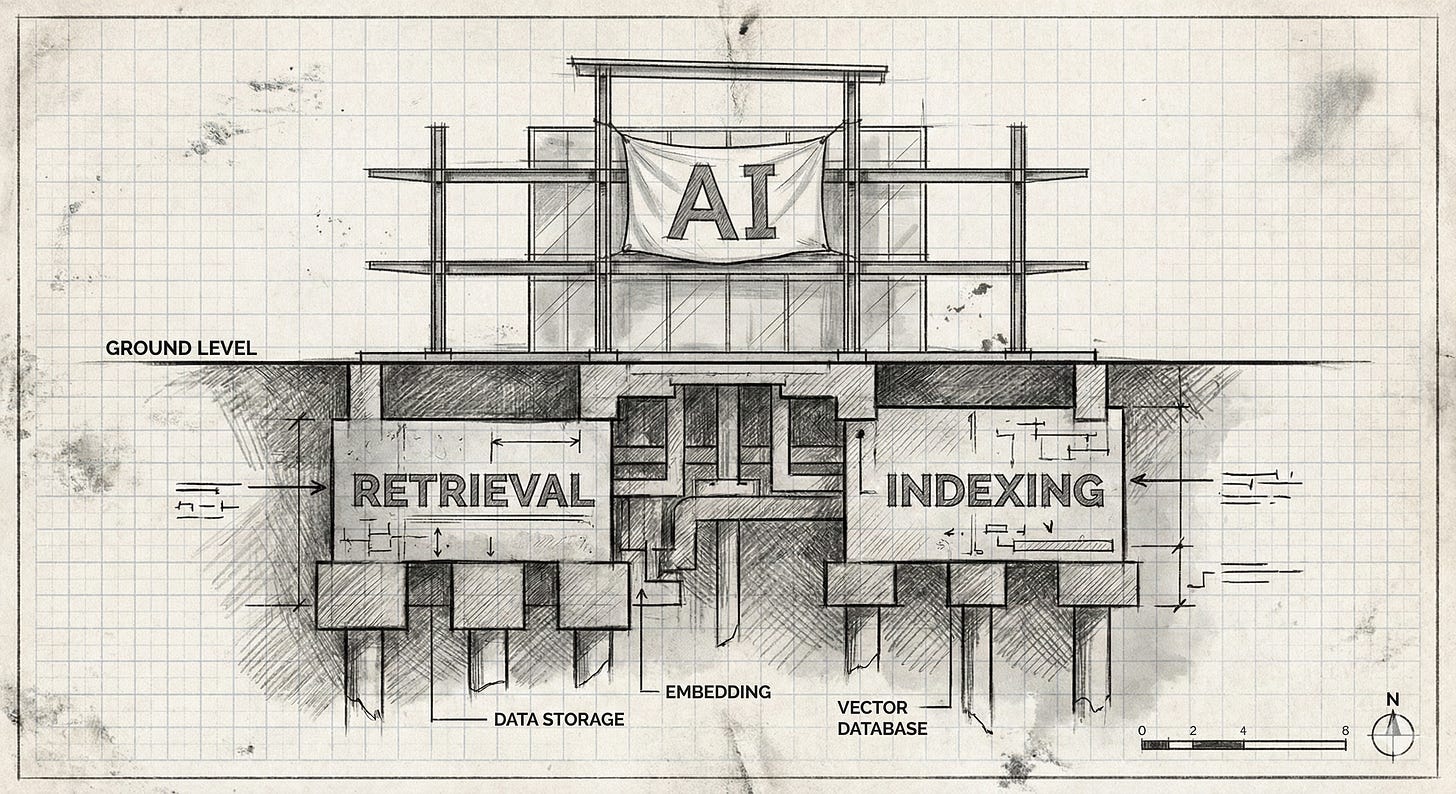

A lot of the “AI Optimisation” discussion assumes we’re dealing with a new ranking logic. In practice, most of the predictable behaviour we see in AI-generated answers comes from retrieval, not generation.

When an AI system needs to ground an answer in external information, it has to retrieve that information first. That retrieval step looks very familiar: indexing, semantic matching, relevance scoring. If content shows up consistently in grounded answers, it’s usually because it satisfies the same conditions that made it discoverable in search engines to begin with.
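To make that retrieval step concrete, here is a minimal sketch of indexing, matching, and relevance scoring over a toy corpus. Everything in it is an assumption for illustration: the three documents, the function names, and the scoring method. In particular, it uses lexical TF-IDF with cosine similarity as a simple stand-in for the semantic (embedding-based) matching a production system would more likely use, and it does not describe how any specific AI product works.

```python
import math
from collections import Counter

# Hypothetical three-document corpus; the texts are illustrative only.
DOCS = {
    "pricing": "pricing plans and monthly cost for the analytics product",
    "setup": "how to install and configure the analytics product",
    "blog": "thoughts on marketing trends and conference season",
}


def tokenize(text):
    """Lowercase and split on whitespace (deliberately naive)."""
    return text.lower().split()


def build_index(docs):
    """Indexing: per-document term counts plus document frequencies."""
    term_counts = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}
    doc_freq = Counter()
    for counts in term_counts.values():
        doc_freq.update(counts.keys())
    return term_counts, doc_freq


def tfidf(counts, doc_freq, n_docs):
    """Weight each term by its count and its rarity (smoothed IDF)."""
    return {
        term: count * (math.log((1 + n_docs) / (1 + doc_freq.get(term, 0))) + 1)
        for term, count in counts.items()
    }


def cosine(a, b):
    """Cosine similarity between two sparse term-weight vectors."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=2):
    """Relevance scoring: rank documents against the query, keep the top k."""
    term_counts, doc_freq = build_index(docs)
    n_docs = len(docs)
    query_vec = tfidf(Counter(tokenize(query)), doc_freq, n_docs)
    scored = [
        (cosine(query_vec, tfidf(counts, doc_freq, n_docs)), doc_id)
        for doc_id, counts in term_counts.items()
    ]
    scored.sort(reverse=True)
    # Documents with no overlap score zero and are never candidates.
    return [(doc_id, round(score, 3)) for score, doc_id in scored[:k] if score > 0]


if __name__ == "__main__":
    print(retrieve("analytics pricing cost", DOCS))
```

For the query "analytics pricing cost", the focused pricing page ranks first, the setup page trails on a single shared term, and the blog post is never a candidate at all. The generation step can only work with what this stage hands it, which is why clear, single-purpose content tends to be what gets reused.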

What confuses people is everything outside that predictable layer.

Ungrounded AI output can mention brands, URLs, or ideas that have little or no search visibility. Those moments feel exciting, but they’re not reproducible. They’re the side effect of probabilistic generation, not evidence of a new authority model. Treating them as strategy is a category error.

It’s also a great way to sell tools.

The future belongs to what’s influenceable, not what’s occasionally visible.

The technical distinction between grounded retrieval and probabilistic generation—and why only one of them is influenceable—is explored in more detail in Visibility in LLMs and AI Overviews.

Content strategy after content becomes cheap

As AI lowers the cost of producing text, it raises the cost of being worth reading—or reusing.

This is where human insight becomes the real differentiator. Not “human tone” or “authentic voice” in the abstract, but actual judgment: what to include, what to exclude, and what you’re willing to stand behind.

Signals like experience, expertise, and credibility matter less because algorithms “care” about them than because retrieval systems favour sources that are already trusted, well-defined, and internally consistent. Vague content can be generated infinitely. Specific content has friction. That friction is what makes it valuable.

In an AI-saturated web, the winning strategy isn’t to publish more. It’s to publish less, with more conviction.

The strategic shift most teams are missing

The biggest opportunity created by AI isn’t tactical. It’s organisational.

Most companies still treat discoverability as a downstream activity. Something to fix after launch. Something owned by a single team.

That model doesn’t scale when machines, not just humans, are your primary audience.

Teams that perform well in AI-driven discovery environments tend to share a few traits:

- Discoverability is considered during product and content design, not after the fact
- Information is structured consistently across the organisation
- Entities, terminology, and positioning are stable, not improvised
- Measurement focuses on what’s knowable, not what’s fashionable

This isn’t “AI strategy.” It’s the work that should have been happening all along—and the organisations doing it don’t need a new acronym to justify it.

For a deeper critique of why most “AI strategies” collapse under scrutiny—and why this is fundamentally an organisational problem, not a tooling one—see Your AI Strategy Isn’t a Strategy.

A grounded outlook for 2026

What persists: Retrieval infrastructure. Structured content. Organisational discipline around discoverability. The boring, foundational work that compounds regardless of which interface sits on top of it.

What doesn’t: The chatbot on your pricing page that confidently invents discount codes. The “ask our AI” widget nobody asked for. The assumption that adding a conversational layer improves an experience that worked fine as a list.

2026 is when the AI graveyard starts filling up—quietly, without retrospectives, and with a lot of “we’re refocusing on core functionality” in the changelogs.

The conversation around AI and search will get less noisy and more honest. The industry will stop chasing interfaces and start respecting systems. Organic search won’t disappear; it will become the stabilising layer that AI relies on.

The future of organic search in an AI-first world isn’t about learning how to talk to machines differently. It’s about making sure there’s something coherent, accurate, and worth retrieving when they do the talking.